The PATTERN project uses state-of-the-art technology to improve road infrastructure management. These innovative technologies include:

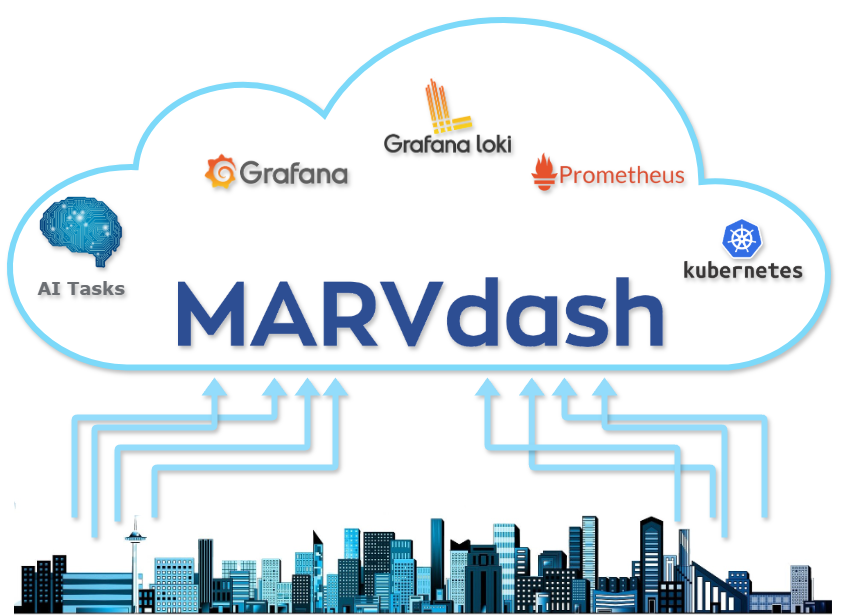

MARVdash

MARVdash is a cloud-based dashboard designed to manage the deployment of services in cloud environments. Serving as a centralised interface, it enables the orchestration of containerised applications and the configuration of services through a user-friendly web platform. Cameras act as data input sources, with all other components, including data processing and task execution, deployed entirely in the cloud. MARVdash optimises resource allocation and scheduling within the cloud, ensuring that complex AI tasks, such as video anomaly detection, are executed efficiently while maintaining scalability and performance.

Built using Python and the Django framework, MARVdash integrates robust tools like Grafana for performance visualisation, Prometheus for metrics monitoring, and Loki for centralised log aggregation. Deployed on Kubernetes within a cloud environment, it simplifies the management of distributed services by providing automated configurations, service templates, and real-time monitoring capabilities. By consolidating control and resource management, MARVdash enables seamless interaction between users and the PATTERN platform, optimising the deployment and execution of services while leveraging the scalability of the cloud.
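MARVdash's own template format is not documented here, so the following is a hypothetical sketch of the "service template" idea. A plain Python function plays the role of a template: it takes user-supplied settings (service name, container image, replica count, GPU count) and fills them into a standard Kubernetes `apps/v1` Deployment manifest. The function name and the example image are made up. The `nvidia.com/gpu` resource name assumes the NVIDIA device plugin is installed on the cluster.

```python
import json


def render_deployment(name: str, image: str, replicas: int = 1, gpus: int = 0) -> dict:
    """Fill a hypothetical service template with user-supplied settings.

    Returns a Kubernetes apps/v1 Deployment manifest as a plain dict.
    """
    container = {"name": name, "image": image}
    if gpus > 0:
        # GPU limits use the extended resource name exposed by the NVIDIA device plugin.
        container["resources"] = {"limits": {"nvidia.com/gpu": gpus}}
    labels = {"app": name}  # the selector must match the pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


if __name__ == "__main__":
    # Example: a GPU-backed anomaly-detection service (hypothetical image name).
    manifest = render_deployment("anomaly-detector", "registry.example.org/vad:latest", gpus=1)
    print(json.dumps(manifest, indent=2))
```

Because Kubernetes accepts JSON manifests, the printed output could be applied with `kubectl apply -f`. Keeping the template as a function means a dashboard only has to collect a few fields from the user and can validate them before anything reaches the cluster.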

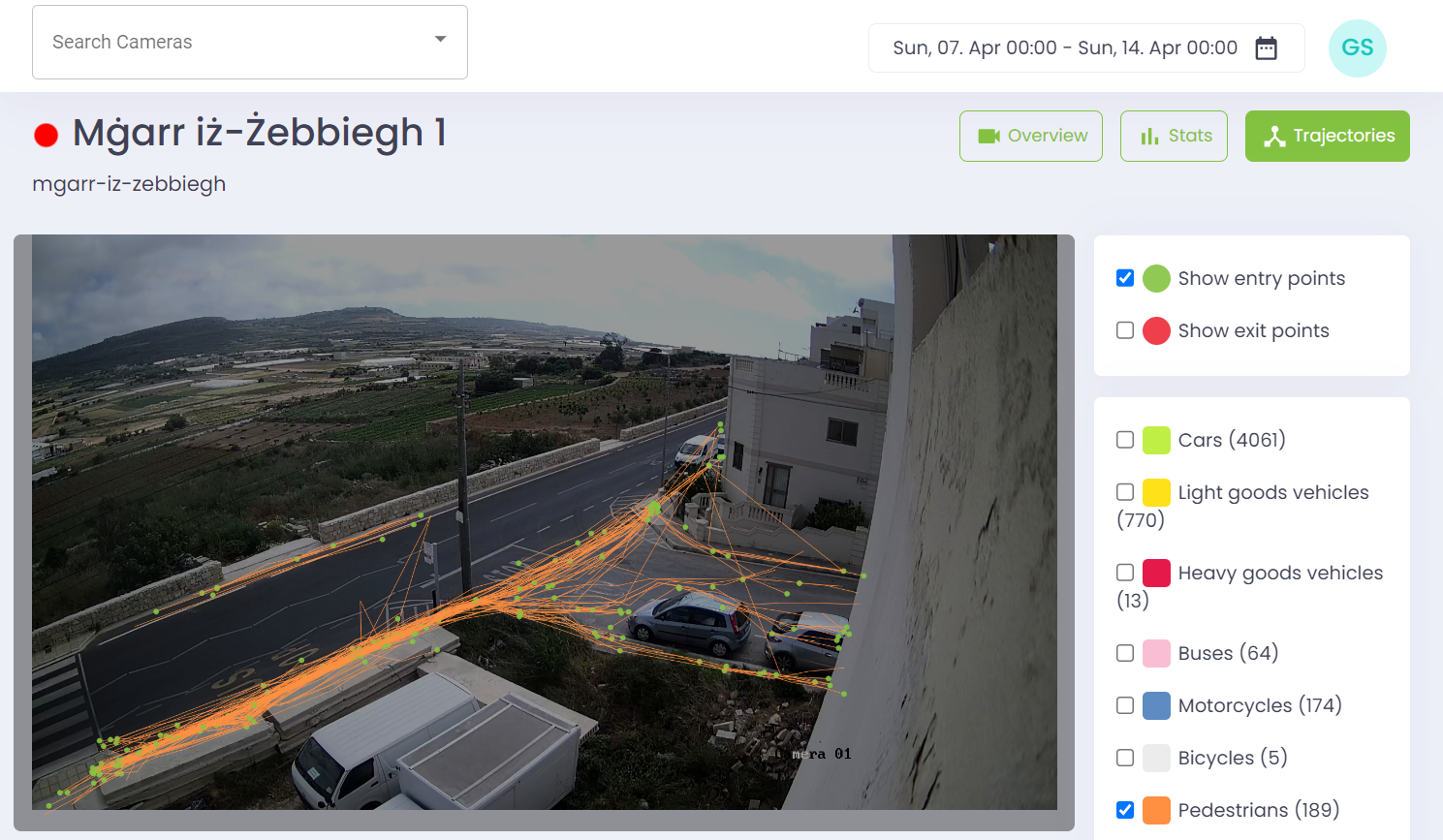

CATflow

CATflow is a software component that uses GPU resources to detect and track vehicles and pedestrians in the real-time feed from a fixed camera. Entry and exit lines are used to monitor traffic flow: for each road user, CATflow records the time of entry and exit, the trajectory, the speed and the vehicle type. This data is then published through Kafka, which allows the pipeline to scale, and can be used to generate reports and visualisations.
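The entry/exit-line logic can be illustrated with a minimal sketch. It is not CATflow's code, and it assumes a tracker already provides, for each road user, a list of timestamped centroids in pixel coordinates. A crossing is detected when consecutive centroids fall on opposite sides of a counting line (a cross-product side test). Speed is derived from the path length between the two crossings using a hypothetical fixed `metres_per_pixel` calibration. The `Track` class, function names and calibration value are all illustrative. The result is a JSON record of the kind that could be published to a Kafka topic.

```python
import json
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # centroid in pixel coordinates
Line = Tuple[Point, Point]   # counting line given by its two endpoints


def _side(a: Point, b: Point, p: Point) -> float:
    """Cross product: >0 if p is left of a->b, <0 if right, 0 if on the line."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crosses(line: Line, p0: Point, p1: Point) -> bool:
    """True if the step p0->p1 passes through the counting-line segment.

    A point exactly on the line counts as the non-negative side, so a track
    that touches the line is counted once, when it leaves that side.
    """
    a, b = line
    changes_side = (_side(a, b, p0) >= 0) != (_side(a, b, p1) >= 0)
    within_segment = _side(p0, p1, a) * _side(p0, p1, b) <= 0
    return changes_side and within_segment


@dataclass
class Track:
    track_id: int
    vehicle_type: str
    points: List[Tuple[float, Point]]  # (timestamp in s, centroid), in time order


def first_crossing(track: Track, line: Line) -> Optional[float]:
    """Timestamp of the first frame after the track crosses the line, if any."""
    for (_, p0), (t1, p1) in zip(track.points, track.points[1:]):
        if crosses(line, p0, p1):
            return t1
    return None


def summarise(track: Track, entry: Line, exit_: Line, metres_per_pixel: float) -> Optional[dict]:
    """Build one traffic-flow record, or None if the track did not pass both lines."""
    t_in, t_out = first_crossing(track, entry), first_crossing(track, exit_)
    if t_in is None or t_out is None or t_out <= t_in:
        return None
    # Path length in pixels over the frames between the two crossings.
    span = [p for t, p in track.points if t_in <= t <= t_out]
    pixels = sum(((x1 - x0) ** 2 + (y1 - y0) ** 2) ** 0.5
                 for (x0, y0), (x1, y1) in zip(span, span[1:]))
    speed_kmh = pixels * metres_per_pixel / (t_out - t_in) * 3.6  # m/s -> km/h
    return {
        "track_id": track.track_id,
        "vehicle_type": track.vehicle_type,
        "entry_time": t_in,
        "exit_time": t_out,
        "trajectory": [list(p) for _, p in track.points],
        "speed_kmh": round(speed_kmh, 1),
    }


if __name__ == "__main__":
    # A car moving left to right, 10 px per frame at 10 frames per second.
    car = Track(1, "car", [(i / 10, (10.0 * i, 50.0)) for i in range(11)])
    entry_line = ((25.0, 0.0), (25.0, 100.0))
    exit_line = ((75.0, 0.0), (75.0, 100.0))
    record = summarise(car, entry_line, exit_line, metres_per_pixel=0.1)
    print(json.dumps(record))  # payload that could be sent to a Kafka topic
```

Only the summary record leaves this function, never the frames, which is consistent with the anonymisation approach described below.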

The AI component of CATflow uses machine learning to identify road users and then analyses the data to determine when they enter and exit the camera’s view and the trajectory they follow. This information is anonymised: video frames are discarded after analysis, and individual users cannot be tracked once they leave the frame.

Audio-visual anonymisation tools

In today’s data-sensitive landscape, VideoAnony and AudioAnony are essential tools for enabling effective surveillance and audio-visual processing while upholding individual privacy. Both tools were developed within the MARVEL H2020 project.

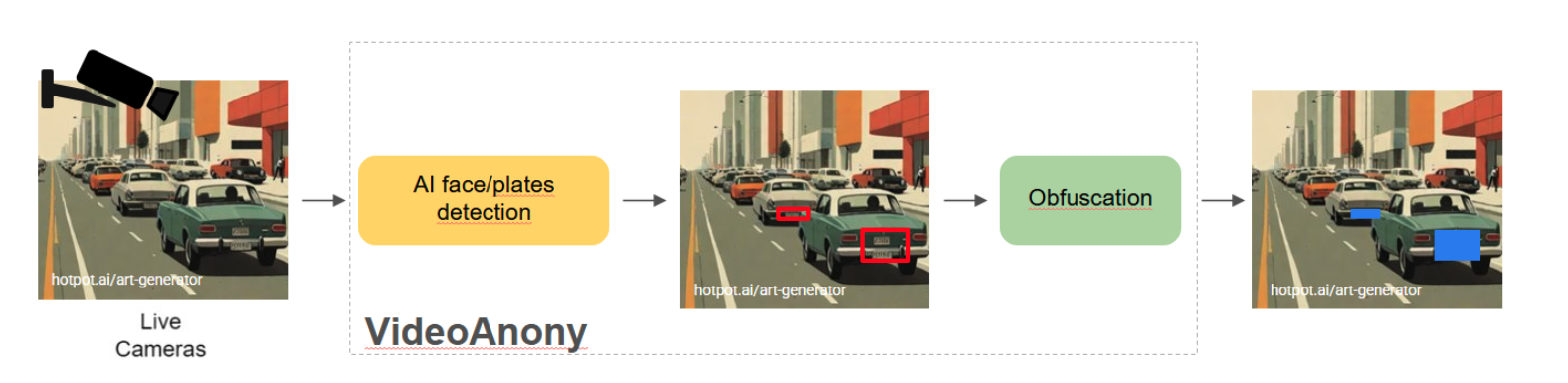

VideoAnony

VideoAnony is a cutting-edge machine learning tool designed to obfuscate personally identifiable information in images and videos, supporting GDPR compliance while still allowing video footage to be used securely. For example, given video streamed from a fixed camera overlooking a busy street, VideoAnony’s detection algorithms identify and localise faces and vehicle licence plates in the footage. Once detection is complete, the obfuscation phase blurs these regions, protecting identities and personal data. The rest of the scene is left intact, so the processed video keeps its original context while prioritising privacy, and it is made available as a streaming output for further use.
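VideoAnony’s detection models and blur parameters are not specified here, so this minimal sketch covers only the obfuscation step. It assumes a detector has already returned bounding boxes as hypothetical `(x, y, width, height)` tuples. A simple box blur stands in for whatever blur VideoAnony actually applies. It runs on a grayscale frame stored as nested lists and leaves every pixel outside the boxes untouched.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]          # grayscale frame, frame[row][col] in 0..255
Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels, from a detector


def blur_regions(frame: Frame, boxes: Sequence[Box], radius: int = 3) -> Frame:
    """Return a copy of the frame with a box blur applied inside each detected box."""
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]  # the input frame is never modified
    for bx, by, bw, bh in boxes:
        # Clip the box to the frame boundaries.
        x0, y0 = max(bx, 0), max(by, 0)
        x1, y1 = min(bx + bw, width), min(by + bh, height)
        for r in range(y0, y1):
            for c in range(x0, x1):
                # Mean of the (2*radius+1)^2 neighbourhood, clipped to the box
                # so that pixels outside the detection are not mixed in.
                rows = range(max(r - radius, y0), min(r + radius + 1, y1))
                cols = range(max(c - radius, x0), min(c + radius + 1, x1))
                values = [frame[i][j] for i in rows for j in cols]
                out[r][c] = sum(values) // len(values)
    return out
```

A production pipeline handling 20 frames per second would instead use an optimised routine such as OpenCV’s `cv2.GaussianBlur` on each region, typically on the GPU. The pure-Python loops here only make the per-region logic explicit.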

VideoAnony is a standalone solution that integrates easily into existing systems and processes streaming video in real time at up to 20 frames per second. Using GPU acceleration, it delivers high performance with a minimal footprint and can be deployed on-premises, eliminating the need to send sensitive data to the cloud.

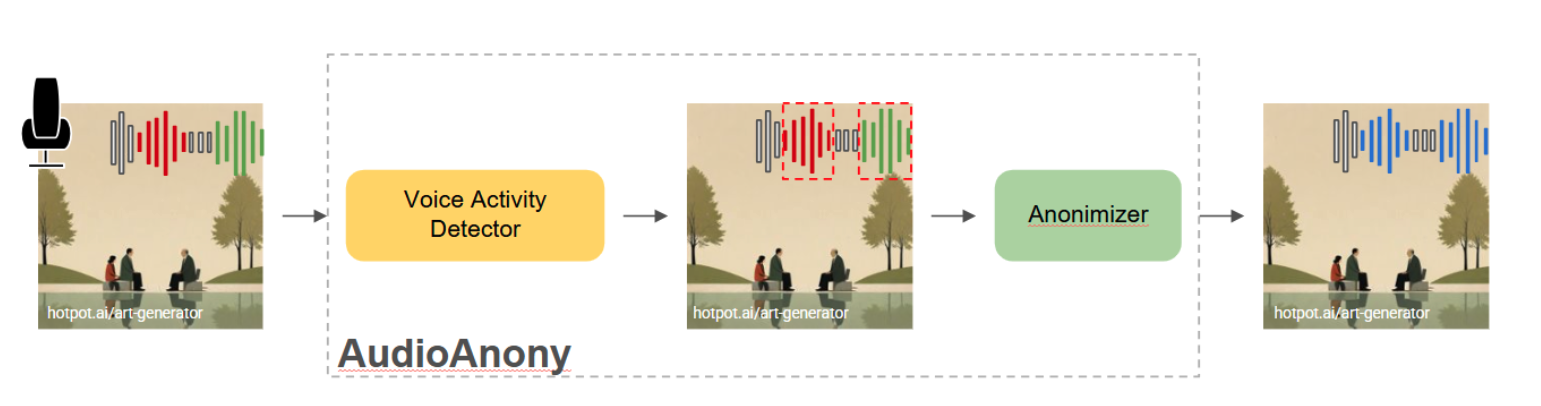

AudioAnony

AudioAnony is the speech counterpart of VideoAnony. It camouflages human voices to conceal the speaker’s identity while preserving the surrounding acoustic content. Because it relies on a lightweight voice activity detector and signal-processing-based voice camouflage, the tool has modest computational requirements and can easily be deployed on low-end devices such as a Raspberry Pi.

The tool receives the raw audio stream as input and outputs the same stream with the voices masked.
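The stream-in, stream-out pipeline described above (voice activity detection followed by voice camouflage) can be sketched in standard-library Python. This is not AudioAnony’s implementation. A plain frame-energy threshold stands in for its voice activity detector. Ring modulation, which multiplies the signal by a sine carrier, is a swapped-in camouflage technique. The sample rate, frame length, threshold and carrier frequency are all hypothetical values that would need tuning.

```python
import math
from typing import Iterable, Iterator, List

SAMPLE_RATE = 16_000     # Hz (assumed)
FRAME_LEN = 320          # samples per analysis frame: 20 ms at 16 kHz
ENERGY_THRESHOLD = 0.02  # RMS level treated as "voice" (hypothetical)
CARRIER_HZ = 150.0       # ring-modulation carrier frequency (hypothetical)


def rms(frame: List[float]) -> float:
    """Root-mean-square level of a frame of samples in [-1, 1]."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def mask_voices(chunks: Iterable[List[float]]) -> Iterator[List[float]]:
    """Yield each incoming chunk with frames above the energy threshold ring-modulated."""
    n = 0  # global sample index keeps the carrier phase continuous across chunks
    for chunk in chunks:
        out: List[float] = []
        # A trailing partial frame at the end of a chunk is analysed on its own.
        for start in range(0, len(chunk), FRAME_LEN):
            frame = chunk[start:start + FRAME_LEN]
            if rms(frame) > ENERGY_THRESHOLD:
                # Multiplying by a sinusoid shifts the voice spectrum to sum and
                # difference frequencies, obscuring the speaker's timbre.
                frame = [s * math.sin(2 * math.pi * CARRIER_HZ * (n + i) / SAMPLE_RATE)
                         for i, s in enumerate(frame)]
            out.extend(frame)
            n += len(frame)
        yield out
```

Because this detector only measures loudness, loud non-speech sounds would also be modulated. A dedicated voice activity detector is what lets a tool like AudioAnony leave the rest of the acoustic scene intact. The generator interface mirrors the streaming behaviour: each chunk is processed and emitted as soon as it arrives.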